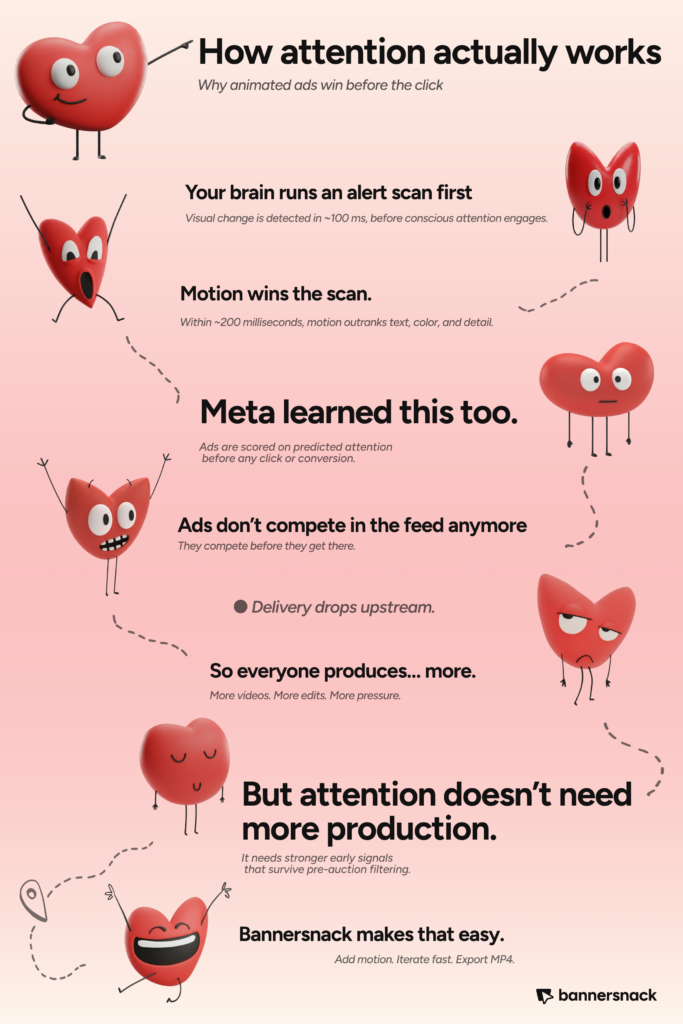

You have less than one second to win attention with a social media ad, and not just because people scroll fast. Meta’s delivery infrastructure now makes a prediction about your creative before any human ever sees it, evaluating whether your ad is likely to hold attention and quietly excluding it from delivery if the prediction comes back weak. You won’t find a rejection notice or an error message anywhere in your dashboard, just fewer impressions, slower spend, and a creeping sense that something changed but you can’t quite pinpoint what.

This article unpacks what actually shifted inside Meta’s ad delivery system, why animated creatives consistently send stronger signals through that infrastructure, and how teams can produce motion-based social media ads quickly without spinning up a video production pipeline. We’ll get into the technical layer (a system called Andromeda and a learning model called GEM), take a geeky detour into the neuroscience of why human brains are hardwired to notice movement before anything else, and then cover the practical side of making all this work without losing your timeline or your sanity. If you’d rather watch than read, we also covered this topic in a YouTube video.

What Changed Inside Meta’s Ad Delivery

Most advertisers who notice declining reach or rising CPMs respond by adjusting targeting, testing new copy, or increasing budgets. These are reasonable instincts, but they address the wrong layer of the problem. Meta didn’t just tweak how ads get matched to audiences. It made a structural change to how ads qualify for delivery consideration in the first place.

The system now runs every ad through a pre-auction consideration layer called Andromeda. Here’s what you need to know about how it works:

- Andromeda evaluates ads before the auction even starts. At massive scale and extremely low latency, it determines which ads are worth considering for a specific person, on a specific surface, at a specific moment. This happens before bidding logic engages, before optimization kicks in, and before any user has the opportunity to interact with the creative.

- It filters ads out, not in. The majority of ads never make it past this stage. If your creative doesn’t send strong enough predicted attention signals, it gets excluded from the consideration set entirely.

- Andromeda isn’t brand new, but most advertisers are still catching up to it. It’s been part of Meta’s infrastructure for a while, but it has been rolling out with increasing scale and sophistication over recent quarters. The system evolved significantly while many ad teams were still optimizing for a delivery model that no longer fully applies, which is a big part of why recent performance shifts feel so disorienting.

- The filtering is completely invisible in your reporting. When Andromeda excludes your ad, nothing in your dashboard flags it. You see the downstream symptoms instead: slower spend, fewer impressions, rising CPMs, and declining delivery that looks like audience fatigue but actually originates in a creative signal problem upstream.

This is why “testing more audiences” often doesn’t help. The constraint isn’t about who sees your ad. It’s about whether your ad gets invited to the consideration set at all. Andromeda makes that call based on predicted attention signals from the creative itself, not from your audience parameters.
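To make the two-stage idea concrete, here is a toy sketch. It is not Meta’s implementation: the `Ad` fields, the scores, the `top_k` cutoff, and the "bid times attention" auction rule are all assumptions chosen for illustration. The point is only that an ad excluded at the first stage never reaches the second, however high its bid.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    ad_id: str
    predicted_attention: float  # hypothetical score in [0, 1]
    bid: float                  # hypothetical bid in dollars


def retrieve_candidates(ads, top_k):
    """Stage 1 (pre-auction): keep only the top_k ads by predicted attention."""
    ranked = sorted(ads, key=lambda ad: ad.predicted_attention, reverse=True)
    return ranked[:top_k]


def run_auction(candidates):
    """Stage 2: among surviving candidates, pick the highest bid * attention."""
    return max(candidates, key=lambda ad: ad.bid * ad.predicted_attention)


ads = [
    Ad("static_banner", predicted_attention=0.20, bid=20.00),
    Ad("animated_a", predicted_attention=0.65, bid=4.00),
    Ad("animated_b", predicted_attention=0.55, bid=5.00),
]

candidates = retrieve_candidates(ads, top_k=2)
winner = run_auction(candidates)

print([ad.ad_id for ad in candidates])  # the static banner is not in this list
print(winner.ad_id)
print(run_auction(ads).ad_id)           # what the auction alone would have picked
```

In this made-up data the static banner would have won the auction on bid alone, but it never gets there. That is the pattern described above: raising budgets or changing audiences acts on stage two, while the constraint sits in stage one.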

The Learning System Behind the Filter

Andromeda doesn’t make its filtering decisions based on simple rules or static thresholds. Behind it sits GEM (Generative Ads Recommendation Model), Meta’s foundation model that teaches the entire ad delivery system how to interpret creative content and behavioral signals.

GEM is the learning layer, not a delivery mechanism. It absorbs patterns from trillions of interactions across Meta’s full ecosystem, including Instagram, Facebook, Messenger, and Business Messaging, then propagates that understanding to all downstream models powering ad delivery. Andromeda is one of the primary beneficiaries of that propagation.

GEM processes three categories of input simultaneously:

- Sequence features that capture a user’s activity history across organic content and ad interactions over extended time periods

- Non-sequence features including user and ad attributes such as location, ad format, creative type, and device context

- Cross-feature interactions that map how specific user behaviors correlate with particular ad characteristics across different surfaces and contexts

That multi-dimensional learning means GEM doesn’t just develop broad patterns like “this demographic clicks fitness ads.” It builds granular, individual-level behavioral understanding, learning for instance that a particular user consistently engages with motion-based product demonstrations in Stories but scrolls past carousel formats in Feed. That level of behavioral resolution feeds directly into how Andromeda scores and filters ads for delivery.
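One way to picture the three input categories is as a small data structure. This is a minimal sketch, not GEM’s actual schema: the class names, the fields, and the idea of summarizing cross-feature interactions as a per-(surface, format) engagement rate are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SequenceEvent:
    """One item in a user's activity history (a sequence feature)."""
    surface: str    # e.g. "stories" or "feed"
    ad_format: str  # e.g. "motion" or "carousel"
    engaged: bool


@dataclass
class ModelInput:
    sequence: list[SequenceEvent]  # sequence features, in time order
    attributes: dict[str, str]     # non-sequence features (device, location, ...)


def cross_feature_rates(sequence):
    """Toy cross-feature: engagement rate per (surface, ad_format) pair."""
    totals = {}
    for event in sequence:
        key = (event.surface, event.ad_format)
        shown, engaged = totals.get(key, (0, 0))
        totals[key] = (shown + 1, engaged + int(event.engaged))
    return {key: engaged / shown for key, (shown, engaged) in totals.items()}


events = [
    SequenceEvent("stories", "motion", True),
    SequenceEvent("stories", "motion", True),
    SequenceEvent("stories", "motion", False),
    SequenceEvent("stories", "motion", True),
    SequenceEvent("feed", "carousel", False),
    SequenceEvent("feed", "carousel", False),
]
user_input = ModelInput(sequence=events, attributes={"device": "mobile"})

rates = cross_feature_rates(user_input.sequence)
print(rates)  # {('stories', 'motion'): 0.75, ('feed', 'carousel'): 0.0}
```

The hypothetical user here matches the example in the text: strong engagement with motion in Stories, none with carousels in Feed. A real model learns far richer representations, but the shape of the inputs is the useful part of the picture.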

According to Meta’s engineering blog post “Meta’s Generative Ads Model (GEM): The central brain accelerating ads recommendation AI innovation,” GEM’s launch delivered a 5% increase in ad conversions on Instagram and a 3% increase on Facebook Feed in Q2 2025. In Q3 2025, architectural improvements doubled the performance benefit gained from a given amount of additional data and compute. That compounding efficiency matters because it suggests GEM keeps getting better at teaching Andromeda which creative signals predict sustained attention, and correspondingly tightens the filter on ads that don’t generate those signals.

How Creative Format Becomes a Delivery Signal

The relationship between GEM and Andromeda creates a direct connection between your creative format and your delivery outcomes. Here’s the mental model that makes it practical:

Andromeda decides whether your ad gets considered. GEM shapes how the signals within your ad are interpreted and valued.

Andromeda needs fast, early indicators to determine whether an ad justifies a delivery slot. GEM improves Meta’s ability to read those indicators across formats and surfaces. Animated creatives produce richer behavioral data points at every moment of the viewing experience, including watch time, completion rate, interaction timing, and scroll-pause duration. These data points give GEM much more material to learn from compared to the relatively sparse engagement data generated by formats without motion.

That learning then gets shared across Feed, Stories, and Reels through what Meta’s engineering team calls the “InterFormer” architecture, a system designed to preserve full sequence information while enabling cross-feature learning. When GEM learns that a particular animated creative drives engagement in Feed, it can use those patterns to improve delivery predictions for the same creative in Stories and Reels, creating a cross-surface optimization loop that benefits the advertiser without requiring separate assets for each placement.

The practical takeaway is that creative has shifted from being the thing people see after delivery decisions are made to being one of the primary inputs that shapes those delivery decisions. More creative variations in this system don’t create noise; they create learning signal. And the density of that signal is heavily influenced by format.

A Brief Geeky Detour Into Why Your Brain Notices Movement First

This is where things get genuinely interesting, because the reason motion generates stronger attention signals in Meta’s systems has almost nothing to do with Meta and everything to do with how the human visual cortex developed under evolutionary pressure.

Andrew Huberman, the neuroscientist behind the Huberman Lab podcast, frequently explains that human vision is not a passive process. Your brain runs a constant, aggressive prediction engine trying to anticipate what’s about to happen in your visual field. Here’s why that matters for advertising:

- Your brain processes change before it processes detail. Movement gets detected by older, faster neural pathways (the magnocellular system) before your conscious mind has assembled enough information to form an opinion about whether something deserves attention. Detail, color, and fine pattern recognition arrive later through separate, slower pathways (the parvocellular system) that require meaningfully more cognitive effort.

- This wiring exists because motion detection was a survival mechanism. For most of human history, quickly spotting movement was the difference between finding food and becoming food. The ancestors who noticed rustling in the grass fast enough survived to have descendants. The ones who preferred to carefully evaluate the entire visual scene first are, regrettably, not anyone’s ancestors.

Everyone reading this inherited a visual system that is a motion-detection machine first and a detail-processing machine second. The fact that it now fires reliably when a product image slides into frame on an Instagram Story is an unintended evolutionary side effect, but a very real one for anyone running social media ads.

GEM reached the same conclusion independently, though not through neuroscience. It got there by observing billions of people scrolling through content and consistently pausing on things that move. The biological wiring and the algorithmic learning converged on the same insight from completely different starting points: motion predicts attention.

Inside Meta’s delivery system, this creates a compounding loop. Motion catches human attention, which generates behavioral signals. GEM learns from those signals that motion-based formats correlate with higher attention. Andromeda incorporates that learning and assigns higher scores to motion-based creatives. The ad receives more delivery, which generates more data, which reinforces the pattern further. The loop keeps turning, and it favors motion at every stage.

What “Animated Ads” Actually Means in Practice

When most people hear “animated ads,” they immediately picture a full production workflow involving scripting, filming, editing, sound design, and a rendering queue that finishes sometime next Thursday. That level of production absolutely has its place, but it is not what generates better signals for GEM and Andromeda, and it’s not what the rest of this article is about.

Animated ads, in the context of Meta’s delivery optimization, means adding light, intentional motion to otherwise standard creative layouts. The starting point is an ad you might already have: a headline, a product image, supporting copy, and a CTA button. The animation layer adds structured transitions on top of that foundation:

- Text elements fade in sequentially so the viewer reads them in the intended order rather than scanning randomly

- The product image slides into position or zooms subtly to draw the eye

- Background elements shift to guide attention through the visual hierarchy

The full animation typically runs three to six seconds and loops cleanly. The result is an MP4 file that works natively across Meta placements, delivering the attention signals Andromeda prioritizes and giving GEM richer behavioral data to learn from, all without needing a video editor, a storyboard, or anyone uttering the phrase “can we get just one more take.” The animation is structure and visual hierarchy, not entertainment or spectacle.
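The sequencing described above can be written down as a simple timeline. This is a sketch under stated assumptions: the element names, the half-second fades, and the four-second loop are illustrative choices, not settings from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class FadeIn:
    element: str
    start: float     # seconds from the start of the loop
    duration: float  # seconds the fade takes

    def opacity(self, t):
        """Linear fade: 0 before start, 1 once the fade has finished."""
        if t <= self.start:
            return 0.0
        if t >= self.start + self.duration:
            return 1.0
        return (t - self.start) / self.duration


# Elements appear in reading order: headline, product, copy, then CTA.
TIMELINE = [
    FadeIn("headline", start=0.0, duration=0.5),
    FadeIn("product_image", start=0.5, duration=0.5),
    FadeIn("supporting_copy", start=1.0, duration=0.5),
    FadeIn("cta_button", start=1.5, duration=0.5),
]
LOOP_SECONDS = 4.0  # inside the three-to-six-second range


def frame_state(t):
    """Opacity of every element at time t, wrapping around the loop."""
    t = t % LOOP_SECONDS
    return {step.element: step.opacity(t) for step in TIMELINE}


print(frame_state(0.75))  # headline fully visible, product image halfway in
```

Every reveal finishes by the two-second mark, which leaves the complete layout on screen for the second half of the loop before it restarts.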

Why MP4 Travels Best Through GEM’s Learning Architecture

MP4 stands out as the most reliable creative format for Meta delivery because it directly supports how GEM transfers learning across placements and surfaces.

GEM’s architecture runs what Meta describes as “domain-specific optimization” alongside cross-surface learning. When an animated ad generates strong engagement signals in Feed, GEM uses those signals to improve delivery predictions for the same creative in Stories and Reels. This cross-surface transfer works most effectively with motion-based formats because they produce the behavioral attention signals that propagate through GEM’s learning model with the highest fidelity.

Different surfaces do reward different motion characteristics in practice:

- Feed placements tend to favor clear visual hierarchy and paced reveals, with text appearing in deliberate sequence, products entering the frame purposefully, and CTAs arriving after the value proposition has been established

- Stories and Reels generally prioritize faster motion, vertical framing, and more immediate visual hooks

A single well-structured MP4 can generate productive signals across all three surfaces when the animation follows sound structural principles: centered subject matter, simple transition patterns, and loopable pacing that doesn’t feel jarring on repeat.
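"Centered subject matter" can be checked with a little arithmetic. The sketch below assumes a 1080x1920 master canvas and three commonly used aspect ratios (1:1 and 4:5 for Feed, 9:16 for Stories and Reels). Those numbers are assumptions for illustration, and the sketch does not model how Meta actually crops or overlays interface elements.

```python
def center_crop(master_w, master_h, ratio_w, ratio_h):
    """Largest centered rectangle with the given aspect ratio inside the canvas.

    Returns (left, top, right, bottom) in pixels.
    """
    # Try the full width first; fall back to full height if that is too tall.
    crop_w = master_w
    crop_h = master_w * ratio_h // ratio_w
    if crop_h > master_h:
        crop_h = master_h
        crop_w = master_h * ratio_w // ratio_h
    left = (master_w - crop_w) // 2
    top = (master_h - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)


def shared_safe_zone(master_w, master_h, ratios):
    """Region that stays visible in every centered crop."""
    crops = [center_crop(master_w, master_h, rw, rh) for rw, rh in ratios]
    return (
        max(c[0] for c in crops),
        max(c[1] for c in crops),
        min(c[2] for c in crops),
        min(c[3] for c in crops),
    )


ASSUMED_RATIOS = [(1, 1), (4, 5), (9, 16)]  # illustrative, not an official list
print(shared_safe_zone(1080, 1920, ASSUMED_RATIOS))  # (0, 420, 1080, 1500)
```

Under these assumptions, anything placed in the central 1080x1080 square of a vertical canvas survives all three crops, which is a reasonable place to keep the headline, product, and CTA.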

The InterFormer architecture within GEM preserves full sequence information while enabling cross-feature learning, which means the system extracts meaningful behavioral signals from short, looping animations just as effectively as from longer narrative video content. Three to six seconds of structured motion that communicates visual hierarchy clearly provides sufficient signal density for GEM’s learning. Fifteen-second story arcs are not required.

How Real Brands Use Motion as Structure, Not Spectacle

Looking at how established brands approach animated social media ads reveals a consistent pattern: motion is an information architecture tool, not an entertainment vehicle or a decorative afterthought.

Notion runs Meta ads featuring a product interface with subtle, purposeful transitions where pages slide into view, checkboxes animate to the completed state, and text blocks appear in the sequence a new user would naturally discover features. The motion is hierarchy, not decoration. It guides the viewer’s eye through the value proposition without narration, jump cuts, or dramatic soundtrack, and gives GEM clear structural signals about which visual elements matter in what order.

Grammarly relies on text-based animation almost exclusively, with misspelled words highlighting, corrections sliding into place, and writing score counters incrementing upward. The entire ad consists of typography and light motion with zero video footage, yet it communicates the product’s core function within about three seconds because the animation reveals the benefit progressively rather than presenting everything at once.

Headspace animates simple illustrations with breathing indicators expanding and contracting, meditation timers counting down, and abstract shapes morphing in gentle rhythms. The pacing is deliberately calm and unhurried. Headspace uses motion to set an emotional tone that matches the product’s brand promise. The animation itself becomes the brand experience, not a container for marketing copy.

None of these are big-budget productions, and they aren’t cinematic or technically demanding to produce. They represent smart applications of motion placed at the exact moment where attention gets decided.

Light Animation Versus Full Video Production: When Each Approach Fits

The choice between light animation and full video production depends on what the creative needs to communicate and what production resources are realistically available on a recurring basis.

| Dimension | Light Animation (Animated Banners/Ads) | Full Video Production |
| --- | --- | --- |
| Best suited for | Product features, promotional offers, app interfaces, e-commerce showcases, seasonal campaign refreshes | Brand storytelling, customer testimonials, complex demos requiring narration, emotional campaigns |
| Production time | Minutes to hours per asset, with 10+ variations achievable in a single working session | Days to weeks per asset, requiring scripting, filming, editing, and review cycles |
| Team requirement | A marketer or designer using a banner animation tool, with no video editing expertise needed | Video editor, potentially a scriptwriter, potentially on-camera talent or voiceover artist |
| Cost structure | Software subscription with minimal incremental cost per additional variation | Per-project costs for talent, equipment, editing time, and revision rounds |
| Testing velocity | High, allowing teams to produce and test multiple headline, image, or offer variations rapidly | Low, where each meaningful variation requires a separate production cycle |
| Signal quality for GEM | Strong, as structured motion creates clear behavioral signals that transfer across surfaces | Strong but dependent on whether the video holds attention within the first one to three seconds |
| Cross-placement adaptability | High, where one MP4 with centered subject and loopable pacing works across Feed, Stories, and Reels | Moderate, where aspect ratio, pacing, and hook timing frequently need surface-specific adjustment |
| Where it falls short | Complex narratives, emotional storytelling, testimonials, and demos requiring spoken explanation | High-frequency campaign refreshes, multi-variant testing, and teams without dedicated production capacity |

For most performance advertising scenarios, light animation delivers the creative signals Meta’s systems reward while operating at production speeds that match how campaigns actually run. Full video production remains the right call for specific objectives, but defaulting to it for every campaign creates a bottleneck that limits both testing volume and creative freshness.
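The testing-velocity advantage comes from simple combinatorics: a handful of options per element multiplies into a full test matrix. Here is a minimal sketch of that planning step. The headlines, file names, offers, and preset names are all hypothetical placeholders.

```python
from itertools import product

# Hypothetical creative elements; swap in your own.
headlines = ["Ship faster", "Plan smarter"]
images = ["product_front.png", "product_angle.png"]
offers = ["Free trial", "20% off"]
presets = ["fade_sequence", "slide_in"]  # hypothetical animation preset names

variations = [
    {"name": f"v{i:02d}", "headline": h, "image": img, "offer": o, "animation": a}
    for i, (h, img, o, a) in enumerate(
        product(headlines, images, offers, presets), start=1
    )
]

print(len(variations))  # 16
print(variations[0])
```

Two options for each of four elements already gives sixteen variants. In practice you would likely test a subset, but writing the matrix down first makes it clear which single element differs between any two ads you compare.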

Making Animated Social Media Ads Without a Production Pipeline

Understanding that animated creative sends stronger signals through Meta’s delivery systems is one thing. Actually producing animated ads at the speed campaigns require is a different problem, and it’s the one most teams get stuck on.

Bannersnack was built for this gap. It launched in 2008 as a dedicated online banner maker, and after a period of sustained user demand it was brought back as a standalone product focused specifically on display and social ad production. The core workflow is banner design: you build layouts from templates or from scratch, set up typography, images, and CTAs, then layer in animation using built-in controls for fades, slides, zooms, and sequenced text reveals. You can export as MP4 for social placements, HTML5 for display networks, or GIF, PNG, JPG, and PDF depending on where the ad needs to run.

The animation capability is a natural extension of the design workflow rather than a separate discipline. You’re designing a banner and then deciding which elements should move, in what order, and with what timing. That keeps production accessible enough for a marketer or designer to handle without specialized motion graphics skills.

In practice, this means a static ad that’s already designed can gain the motion layer Andromeda’s filtering rewards in the same workspace where it was built, exported as MP4 in minutes rather than days. And because Bannersnack includes Smart Resize and template-based production, teams can generate multiple animated variations across sizes and formats in a single session, giving GEM the creative diversity it needs to optimize delivery efficiently.

We’re currently exploring a new set of animation presets built around this attention-first approach to digital ads.

The Filter Will Keep Getting Tighter

Meta’s published roadmap for GEM includes expanding its training across the full ecosystem, covering all user interactions with organic and advertising content across text, image, audio, and video modalities. As the system learns from more data with increasingly diverse signal types, its predictions about which creatives hold attention will keep getting sharper.

Meta’s engineering team describes future priorities including “inference-time scaling” for compute optimization and “agentic, insight-driven advertiser automation.” In plainer language, the pre-auction filtering will become more precise and more aggressive over time, with Andromeda getting better at excluding ads that don’t demonstrate strong predicted attention potential and GEM getting better at identifying which creative characteristics reliably predict that attention.

The direction is structural rather than cyclical. GEM gets smarter with each training iteration, Andromeda gets more selective as prediction models improve, and the filter tightens with no indication it will be loosening back up.

Frequently Asked Questions

Does this mean static ads don’t work anymore on Meta?

Static ads still work, but they compete at a disadvantage in Andromeda’s filtering because they generate weaker attention signals. They can still perform when the offer or targeting is strong, but the gap is widening as GEM’s predictions improve.

How long should animated ads be?

Three to six seconds. Long enough to establish information hierarchy (headline, product, CTA in sequence), short enough to loop cleanly. GEM extracts meaningful signals from short animations just as effectively as from longer content.

Will this work for B2B ads or just e-commerce?

Light animation works for anything that benefits from structured information hierarchy. B2B SaaS ads perform well with sequenced feature callouts, animated UI demos, and data visualizations that build over time. Notion and Grammarly are both B2B examples using this approach.

Do I need different animated ads for Feed, Stories, and Reels?

Start with one MP4 and let GEM’s cross-surface learning show you where it performs best. Create placement-specific versions only after you have clear data showing different surfaces need different creative approaches.

How much does light animation cost compared to video production?

A banner animation tool costs a fraction of video production, but the bigger difference is time. Ten animated variations in an afternoon versus weeks of scripting, filming, editing, and revision cycles.

What if my current static ads are still performing fine?

Don’t abandon them, but start testing animated variations alongside them now. The performance gap will likely widen as GEM’s filtering evolves, and it’s better to be prepared than scrambling to adapt after the fact.

Attention Gets Decided Before Action

Meta’s delivery infrastructure now evaluates predicted attention before it evaluates predicted clicks or conversions. Ads that don’t generate strong early attention signals lose delivery at the pre-auction stage, before any human being has the chance to read the headline, evaluate the offer, or tap the CTA.

Andromeda determines whether your ad qualifies for delivery consideration. GEM shapes how your creative signals get interpreted across Meta’s learning systems. Structured motion helps both of those systems work in your favor rather than against you.

The underlying biology hasn’t changed and won’t be changing anytime soon. Human visual systems still prioritize motion detection before conscious evaluation, exactly as they have for a very long time. What changed is that Meta’s ad technology now mirrors that biological reality at platform scale, with GEM having learned the same attention hierarchy from billions of real user interactions and Andromeda filtering delivery based on those learned patterns.

Advertisers who adjust their creative production to match this attention-first model will see the benefits compound as the system improves. Those who don’t may start noticing small, persistent drops in delivery, without any obvious reason showing up in their reports.
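Since nothing in reporting flags this directly, one option is to watch for the symptom pattern yourself. The sketch below uses the standard CPM formula (spend divided by impressions, times 1,000) and flags a campaign whose impressions fall while CPM rises week after week. The weekly figures are invented, and the rule is an assumption: a flag is a prompt to look at the creative, not proof of pre-auction filtering.

```python
def cpm(spend, impressions):
    """Cost per thousand impressions."""
    return spend * 1000 / impressions


def delivery_warning(weeks):
    """True if impressions fell and CPM rose in every consecutive week pair."""
    pairs = list(zip(weeks, weeks[1:]))
    return bool(pairs) and all(
        later["impressions"] < earlier["impressions"]
        and cpm(later["spend"], later["impressions"])
        > cpm(earlier["spend"], earlier["impressions"])
        for earlier, later in pairs
    )


# Invented weekly totals for one campaign.
weeks = [
    {"spend": 500.0, "impressions": 50_000},
    {"spend": 480.0, "impressions": 40_000},
    {"spend": 450.0, "impressions": 30_000},
]

print([cpm(w["spend"], w["impressions"]) for w in weeks])  # [10.0, 12.0, 15.0]
print(delivery_warning(weeks))  # True
```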

P.S. The ironic part? While reading about attention signals, your brain was doing exactly what GEM learned from billions of users. You noticed the bolded text first, skimmed the table, and probably scrolled faster through the technical sections. Motion detection at work. Now go add some movement to your ads before Andromeda decides they’re not worth showing.